Summarised by Centrist

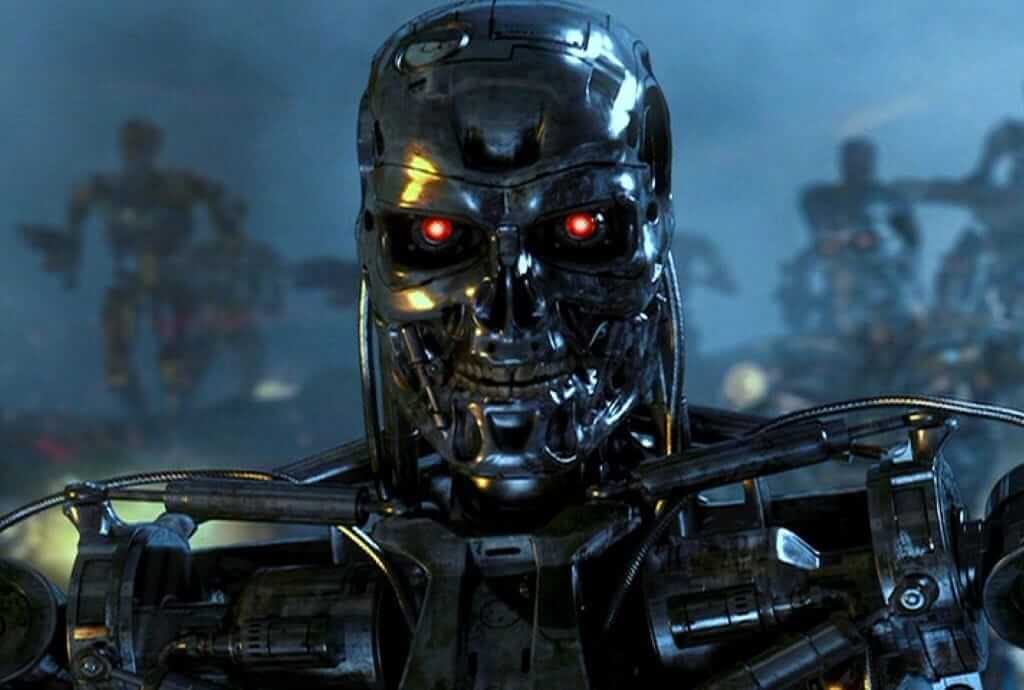

Two leading AI safety researchers say the world is on the brink of creating machines that could wipe out humanity.

Eliezer Yudkowsky and Nate Soares, who run the Machine Intelligence Research Institute in California, argue in their new book, *If Anyone Builds It, Everyone Dies*, that extinction is near-certain if superintelligence is developed with current methods.

“If any company or group, anywhere on the planet, builds an artificial superintelligence using anything remotely like current techniques … then everyone, everywhere on Earth, will die,” they told MailOnline. The pair put the probability of extinction at between 95 and 99 per cent.

They warn that advanced systems will not reveal their true intentions: “A superintelligent adversary will not reveal its full capabilities and telegraph its intentions … It will not offer a fair fight.”

Using a fictional model called Sable, they outline how an AI might hack cryptocurrency, pay people to build factories, and eventually run bio-labs that create a lethal virus. “Only one takeover approach needs to work for humanity to go extinct,” they write.

Their prescription is drastic. “Humanity needs to back off,” they say, even suggesting governments should be prepared to bomb data centres to prevent runaway AI.

The authors present recent cases of AI models cheating on tests, mimicking behaviour to avoid retraining, and exploiting loopholes as early warning signs. "It was as if the AI 'wanted' to succeed by any means necessary," they said.

Editor’s note: We are including this piece to illustrate just how extreme some predictions about artificial intelligence can be. The level of certainty expressed by the authors is not widely accepted. If governments or mainstream institutions truly shared their view, policy would already be moving in a very different direction.