Robert Malone

Robert W Malone is a physician and biochemist. His work focuses on mRNA technology, pharmaceuticals, and drug repurposing research.

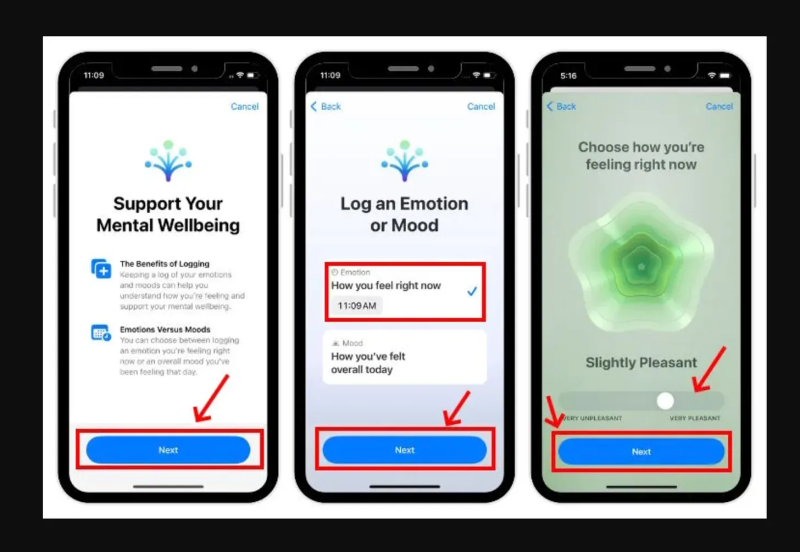

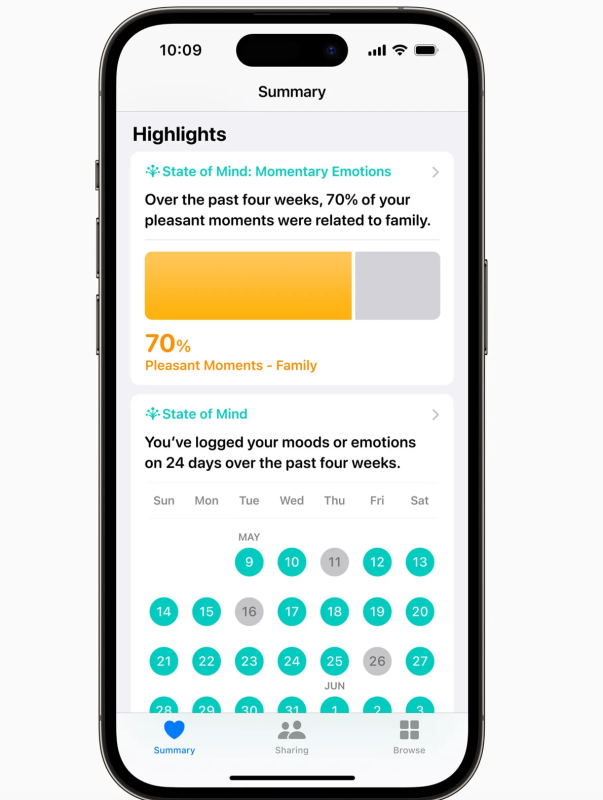

True Story: The health app built into iPhones is now collecting as much personal information on the mental health of each and every one of us as they can get a hold of.

Yet a search on Google and Brave yielded no results on the dangers of sharing such information over the phone or the internet. Seriously, not a single mainstream media (MSM) outlet has done an article on why such data sharing might be a bad idea?

To start, in sharing such data, you aren’t just sharing your information; iPhone knows exactly who your family members are. In many cases, those phones are connected via family plans.

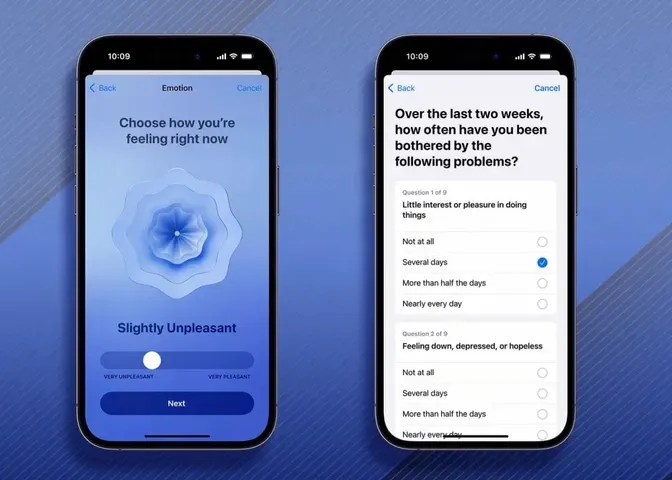

iPhone mental health assessments not only ask questions about your mental health but can also infer the mental health status of family members, as demonstrated by the image above, which was publicly shared to promote the benefits of a phone mental health assessment.

What could possibly go wrong?

Although the iPhone has historically been known to keep user data “safe,” this is not a given, and there have been hacks and data breaches over the years.

CrowdStrike happened – from just a simple coding error. In 2015, all of the confidential data I had given to the DoD and the FBI in order to get a security clearance was harvested by the Chinese government when they hacked into the government’s “super-secret” and “super-secure” data storage site. In response, the government offered me a credit report and monitoring of my credit score for a year. Yeah – thanks.

The bottom line – no data is 100 per cent secure, and this is mental health data. Data that might be extremely embarrassing or career-damaging, or that has the potential to disrupt family relationships. Remember, no one knows what new laws, regulations, or more might come to pass years from now. This type of information should not be harvested and stored.

Furthermore, trusting that Apple will never sell that data or pass it off to research groups is very naive. In fact, mental health data is already being mined.

Apple has partnered with various health organizations and academic institutions, such as Harvard T H Chan School of Public Health, Brigham and Women’s Hospital and the University of Michigan, to conduct health-related studies. Apple also collaborates with the University of California, Los Angeles (UCLA) on a Digital Mental Health Study. This study likely utilizes data collected through Apple devices to investigate mental health patterns and outcomes. Trusting that the user identifiers have been completely stripped before that data is passed on is a risk that one takes when entering such information into an iPhone.

“Connect with resources.”

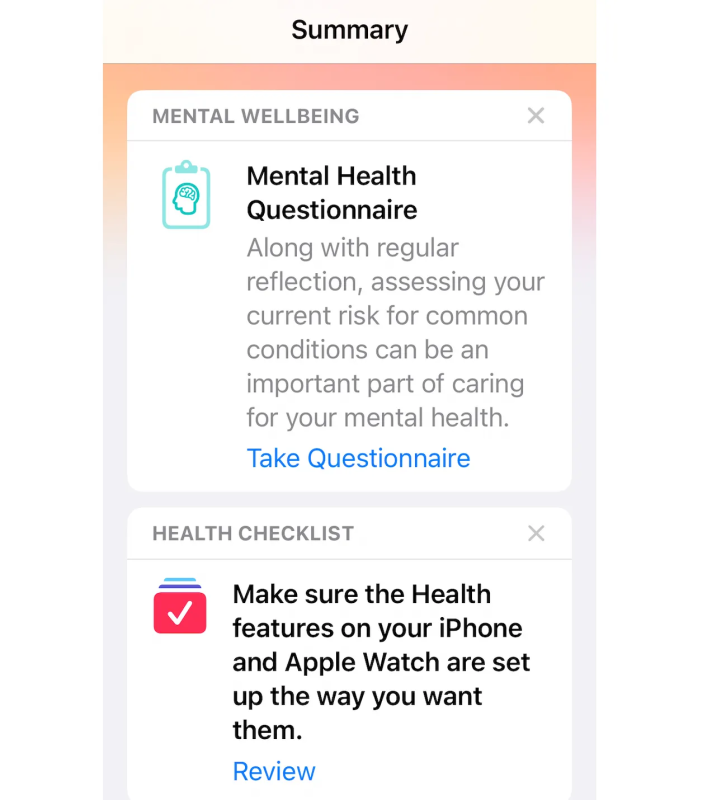

So how does Apple benefit? At this time, it appears that Apple is selling advertising for various mental health services by “connecting” services to people’s phones. Apple writes that “These assessments can help users determine their risk level, connect to resources available in their region, and create a PDF to share with their doctor.”

That might mean that if one presses the “depressed” button in the mental health assessment, Apple will place ads on the search engine for anti-depressants or for physicians who prescribe them.

Why would that example be relevant, and which pharmaceutical companies might benefit?

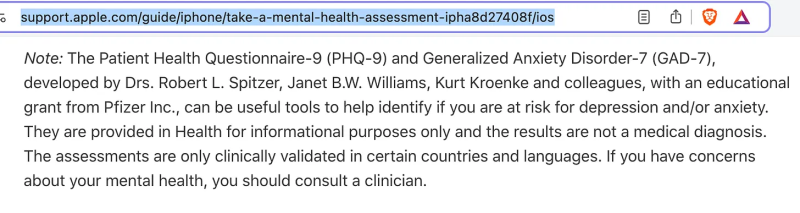

iPhone has developed their mental health assessment with an “educational grant” from Pfizer!

Pfizer manufactures and sells Zoloft, Effexor, Pristiq, and Sinequan formulations. Together the sales revenue for these drugs is in the billions each year:

From 2015 to 2018, 13.2 per cent of American adults reported taking antidepressant medication within the past 30 days, with sertraline (Zoloft) being one of the most common. Even off-patent, there were 39.2 million prescriptions filled, with annual sales revenue of $470 million.

Effexor XR is an antidepressant medication that was originally developed by Wyeth (now part of Pfizer). In 2010, when the first generic version of Effexor XR was introduced in the United States, the brand name product had annual sales of approximately $2.75 billion. By 2013, due to generic competition, Pfizer’s sales of Effexor XR dropped to $440 million.

According to IMS Health data, in 2016, Pristiq (desvenlafaxine) had annual sales of approximately $883 million in the United States, although sales appear to have fluctuated over the years.

The bottom line is that Pfizer is not supplying educational grants to develop mental health assessment software for Apple out of the “goodness of their heart.” Mental health interventions via medication are a big business, and these companies are looking to profit.

This is just one way that Apple is practicing surveillance capitalism: data mining mental health status and then selling access to that data to Big Pharma, Big Tech, physicians, insurance companies, etc.

How this information will be used in the future is unknown. Once released or leaked, it can never be recovered with privacy intact.

If the setting for sharing research on health conditions has not been deactivated, this information will go into a database somewhere. You have only Apple’s assurance that your identification has been stripped from the data. Further, your mental health information will be uploaded to the cloud and will be used as a behavioral future: to be shared, packaged, sold, used to influence your decision-making, etc.

I am wary of the highly profitable industry that has been built up around “mental disorders.” Over the years, the American Psychiatric Association and the fields of psychology and psychiatry have hurt individuals and families by both classifying diseases and disorders incorrectly and by developing treatments and therapies that were and are dangerous. Many are still in use. Here are a few examples:

- There are estimates that 50,000 lobotomies were performed in the United States, with most occurring between 1949 and 1952. In 1949, the Nobel Prize in Physiology or Medicine was awarded to António Egas Moniz for his development of the lobotomy procedure.

- Homosexuality was classified as a mental disorder in 1952 with the publication of the first Diagnostic and Statistical Manual of Mental Disorders (DSM-I) by the American Psychiatric Association (APA). It was listed under “sociopathic personality disturbance.” This classification remained in place until 1973.

- Selective Serotonin Reuptake Inhibitors (SSRIs) are a widely prescribed class of antidepressant medications. There is a link between SSRIs and increased risk of violent crime convictions, and other research has shown an increase in self-harm and aggression in children and adolescents taking SSRIs.

- The APA supports access to affirming and supportive treatment for “transgendered” children, including mental health services, puberty suppression and medical transition support.

- The APA believes that gender identity develops in early childhood, and some children may not identify with their assigned sex at birth.

- Asperger’s syndrome was merged into the broader category of autism spectrum disorder (ASD) in the DSM-5 in 2013. Since then, a large number of people have faced discrimination and barriers to entry into higher-paying positions. Children handed such a diagnosis also can suffer a lack of confidence in their ability to manage relationships effectively, which can easily carry on to adulthood.

These are just a few of the many, many ways the American Psychiatric Association and the fields of psychology and psychiatry have gotten things very wrong.

- A survey of more than 500 social and personality psychologists published in 2012 found that only six per cent identified as conservative overall, implying that 94 per cent were liberal or moderate.

- At a 2011 Society for Personality and Social Psychology annual meeting, when attendees were asked to identify their political views, only three hands out of about a thousand went up for “conservative” or on the right.

The liberal bias in psychology influences findings on conservative behaviors.

To bring this back to the use of software applications and the iPhone mental health app in particular, be aware that these software programs are being developed by people who have a liberal bias and who will view the beliefs of conservatives negatively. What this means for future use of this data is unknown, but it can’t be good.

If you do choose to use the mental health applications, which mainstream media has nothing but praise for, be aware – there are alligators in those waters.

Be sure that the data sharing mode, particularly the setting that gives data access to researchers, is turned off. But even then, don’t be surprised if your phone begins planting messages about the benefits of SSRIs, or other anti-depressant drugs, into your everyday searches. Or maybe your alcohol-use or tobacco-use data will be used to supply you with advertisements on the latest ways to reduce intake or how to find a good mental-health facility. These messages may very well include neuro-linguistic programming methods and nudging, to push you into treatment modalities. But, honestly, it is all for your “own good and for the benefit of your family.”

Now, the iPhone and the watch can be valuable tools. The EKG, blood oxygen, and heart rate monitoring are fantastic tools for those who suffer from cardiac disorders. I have found them to be extremely helpful.

My wife, Jill, is often motivated to get more walking steps each week by her iWatch tracker.

Just be aware that these programs can be invasive. Data is never 100 per cent secure, and it is being used. We just don’t know all the details.

Republished from the author’s Substack by the Brownstone Institute.